Deploying large language models (LLMs) like the newly released GPT-OSS 20B on a platform like Red Hat OpenShift AI offers a robust and scalable solution for production environments, but it can take time before a new model like this runs on the out-of-the-box vLLM serving runtime. If you are like me and want to try the latest and greatest without waiting, you can use a custom Serving Runtime. This guide outlines how to deploy a GPT-OSS model by creating and configuring a custom Serving Runtime on OpenShift AI.

The Challenge: Model-Version Mismatch

As new open-source models like GPT-OSS are released, they often introduce new architectures and optimizations that require the latest versions of inference servers to function correctly. The newly released GPT-OSS 20B and 120B models are a prime example.

Unfortunately, the default vLLM Serving Runtime (version 0.9.0.1) that ships with OpenShift AI does not support these new models. Attempting to deploy the GPT-OSS model on this older runtime fails with errors, because that vLLM version does not recognize the model's new architecture, GptOssForCausalLM.

To solve this, we must create a custom Serving Runtime using a newer version of vLLM, specifically 0.10.1+gptoss, which has been updated to include support for the GPT-OSS model’s unique architecture.
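The mismatch comes down to version ordering, which is easy to get wrong. As a minimal sketch (the helper names are hypothetical, not part of vLLM or OpenShift AI), the snippet below checks whether a runtime's vLLM version is at least 0.10.1, the version this guide relies on, treating the `+gptoss` suffix as a local build label that does not affect ordering. It also shows why a plain string comparison is misleading: "0.9.0.1" sorts after "0.10.1" character by character.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a version like '0.10.1+gptoss' into (0, 10, 1).

    The part after '+' is a local build label and is ignored for ordering.
    """
    public = version.split("+", 1)[0]
    return tuple(int(part) for part in public.split("."))


# Assumption: 0.10.1 is treated as the minimum vLLM version for GPT-OSS, as in this guide.
MIN_GPTOSS_VLLM = parse_version("0.10.1")


def supports_gptoss(runtime_version: str) -> bool:
    """Return True if the runtime's vLLM version is at least the assumed minimum."""
    # Tuple comparison is element-wise: (0, 9, 0, 1) < (0, 10, 1) because 9 < 10.
    return parse_version(runtime_version) >= MIN_GPTOSS_VLLM


if __name__ == "__main__":
    for version in ("0.9.0.1", "0.10.1+gptoss"):
        print(version, "->", supports_gptoss(version))
    # A naive string comparison gets the order backwards ('9' > '1'):
    print("0.9.0.1" > "0.10.1")
```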

Understanding the Components

Before we dive into the steps, it’s important to understand the core components involved:

- OpenShift AI: This is a platform for building, training, and serving AI/ML models. It provides the infrastructure, tools, and a web console to manage the entire AI lifecycle.

- Serving Runtime: This is the container image that serves your model. It includes the inference server, model files (if you’d like), and any necessary dependencies. Using a custom runtime allows you to use a specific inference server, like vLLM or Hugging Face Text Generation Inference, which are optimized for LLM serving.

- GPT-OSS: This is a new open-source LLM series, and for this guide, we'll provide you an OCI container image containing the new 20B model. (I'm GPU poor, so it's all I can test today…)

- InferenceService: This is a Kubernetes custom resource that defines the deployment of your model. It specifies the Serving Runtime to use, the model’s location, and other serving configurations.
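To make the relationship between these pieces concrete, here is a minimal sketch of roughly what an InferenceService that points at the custom runtime could look like, built as a Python dict and printed as JSON (which `oc apply -f` also accepts). It assumes KServe's `serving.kserve.io/v1beta1` API and an `oci://` storage URI for the modelcar image, and `build_inference_service` is a hypothetical helper. The OpenShift AI dashboard generates this resource for you in Step 3 and adds its own annotations and settings that are omitted here, so treat the exact fields as illustrative.

```python
import json

# Names taken from this guide.
RUNTIME_NAME = "vllm-cuda-runtime-gptoss"
MODEL_IMAGE = "quay.io/castawayegr/modelcar-catalog:gpt-oss-20b"


def build_inference_service(name: str, max_model_len: int) -> dict:
    """Build a minimal, illustrative InferenceService (not the dashboard's exact output)."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        # KServe substitutes this name for {{.Name}} in the runtime's args,
        # so it also becomes vLLM's --served-model-name.
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                "model": {
                    # Must match a name in the runtime's supportedModelFormats.
                    "modelFormat": {"name": "vLLM"},
                    # Pins the predictor to the custom runtime from Step 2.
                    "runtime": RUNTIME_NAME,
                    # Assumption: modelcar images are referenced with the oci:// scheme.
                    "storageUri": f"oci://{MODEL_IMAGE}",
                    # Extra arguments passed to vLLM in addition to the runtime's own args.
                    "args": [
                        "--chat-template=/app/data/template/template_gptoss.jinja",
                        f"--max-model-len={max_model_len}",
                    ],
                    "resources": {
                        "limits": {"nvidia.com/gpu": "1"},
                        "requests": {"nvidia.com/gpu": "1"},
                    },
                }
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_inference_service("gpt-oss", 464), indent=2))
```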

Step 1: Using a Pre-built Container Image

To make things easier, we’ll use my pre-built container image from quay.io that already includes a compatible vLLM version. This bypasses the need for you to build the image yourself, allowing you to get up and running faster.

quay.io/castawayegr/vllm:0.10.1-gptoss

This image is configured with vLLM version 0.10.1 and is ready to serve GPT-OSS models.

Step 2: Defining the Custom Serving Runtime on OpenShift AI

Once your image is ready, you need to define the custom Serving Runtime in your OpenShift AI project. This tells the platform how to use your custom image to serve models.

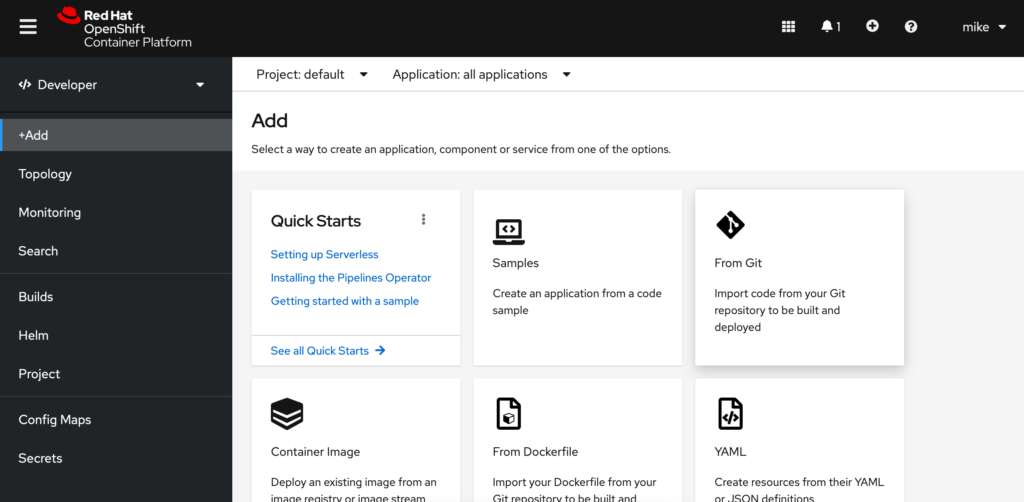

- Navigate to the OpenShift AI Web Console: Log in as a user with admin privileges.

- Access Serving Runtimes: Go to Settings -> Serving Runtimes and click Add Serving Runtime.

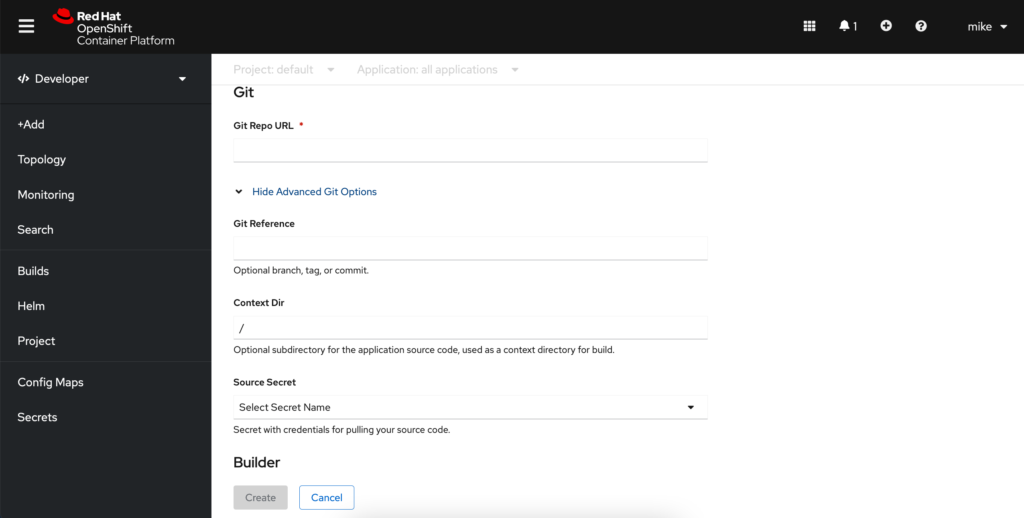

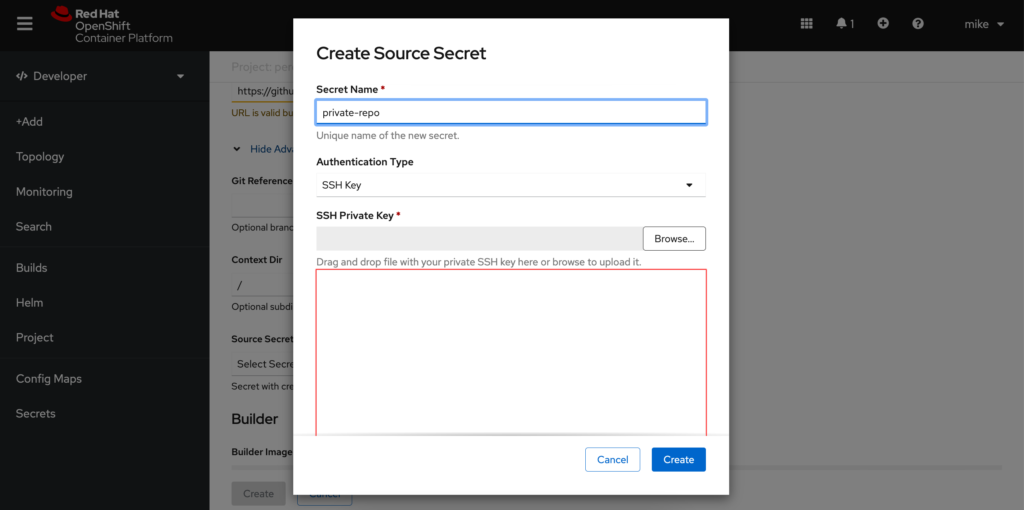

- Create from scratch: You will use a YAML definition to configure the runtime. Paste the YAML below.

```yaml
apiVersion: serving.kserve.io/v1alpha1
kind: ServingRuntime
metadata:
  annotations:
    opendatahub.io/recommended-accelerators: '["nvidia.com/gpu"]'
    opendatahub.io/runtime-version: v0.10.1
    openshift.io/display-name: GPT-OSS vLLM with BitsandBytes NVIDIA GPU ServingRuntime for KServe
    opendatahub.io/apiProtocol: REST
  labels:
    opendatahub.io/dashboard: "true"
  name: vllm-cuda-runtime-gptoss
spec:
  annotations:
    prometheus.io/path: /metrics
    prometheus.io/port: "8080"
  containers:
    - args:
        - --port=8080
        - --model=/mnt/models
        - --served-model-name={{.Name}}
      command:
        - python
        - -m
        - vllm.entrypoints.openai.api_server
      env:
        - name: HF_HOME
          value: /tmp/hf_home
      image: quay.io/castawayegr/vllm:0.10.1-gptoss
      name: kserve-container
      ports:
        - containerPort: 8080
          protocol: TCP
  multiModel: false
  supportedModelFormats:
    - autoSelect: true
      name: vLLM
```

Apply this YAML to your cluster, and the new runtime will appear in the list of available Serving Runtimes.
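Two details in this runtime are easy to break when adapting it. First, KServe replaces `{{.Name}}` in the args with the InferenceService name, and that name is what clients must send as the model name. Second, the `--port` argument, the `prometheus.io/port` annotation, and the `containerPort` all need to agree, since vLLM serves both the API and `/metrics` on that one port. Below is a minimal sketch that checks both, with the relevant fields copied into a Python dict by hand to avoid a YAML parser dependency; `render_args` and `check_ports` are hypothetical helpers, and the substitution is a simplified stand-in for KServe's real templating.

```python
# Relevant fields of the ServingRuntime above, copied by hand.
RUNTIME = {
    "spec": {
        "annotations": {"prometheus.io/path": "/metrics", "prometheus.io/port": "8080"},
        "containers": [
            {
                "name": "kserve-container",
                "args": ["--port=8080", "--model=/mnt/models", "--served-model-name={{.Name}}"],
                "ports": [{"containerPort": 8080, "protocol": "TCP"}],
            }
        ],
    }
}


def render_args(args: list[str], isvc_name: str) -> list[str]:
    """Simplified stand-in for KServe's {{.Name}} substitution (plain string replace)."""
    return [arg.replace("{{.Name}}", isvc_name) for arg in args]


def check_ports(runtime: dict) -> list[str]:
    """Return a list of problems if the vLLM port, metrics annotation, and containerPort disagree."""
    spec = runtime["spec"]
    container = spec["containers"][0]
    arg_ports = [a.split("=", 1)[1] for a in container["args"] if a.startswith("--port=")]
    if len(arg_ports) != 1:
        return ["expected exactly one --port= arg"]
    port = int(arg_ports[0])
    problems = []
    if int(spec["annotations"]["prometheus.io/port"]) != port:
        problems.append("prometheus.io/port does not match --port")
    if port not in [p["containerPort"] for p in container["ports"]]:
        problems.append("no containerPort matches --port")
    return problems


if __name__ == "__main__":
    print(render_args(RUNTIME["spec"]["containers"][0]["args"], "gpt-oss"))
    print(check_ports(RUNTIME) or "ports are consistent")
```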

Step 3: Deploying the GPT-OSS Model via the Web Console

With the custom Serving Runtime now available, you can easily deploy your GPT-OSS model using the OpenShift AI web console.

- Go to the Models and Model Servers page: From the OpenShift AI dashboard, navigate to the Models and Model Servers section.

- Start the deployment process: Click the Deploy model button.

- Fill out the deployment form: You’ll be presented with a form to configure your model deployment.

- Model Name: Give your model a unique name, e.g., gpt-oss.

- Model Server Size: Select an appropriate size for your model. I used the Large size in my deployment, but do what's best for your environment.

- Accelerator: Select NVIDIA GPU for your accelerator.

- Serving Runtime: This is the most crucial step! From the dropdown menu, select the new custom runtime you created in Step 2: GPT-OSS vLLM with BitsandBytes NVIDIA GPU ServingRuntime for KServe.

- Model Location: You will need to specify the storage location of your model weights. Select an existing data connection (S3, PVC, etc.) or create a new one. For my deployment, I have a connection for quay.io. Selecting it gives me the option of an OCI storage location, where I enter the OCI image I created for the 20B model:

quay.io/castawayegr/modelcar-catalog:gpt-oss-20b

- Configuration parameters: You will need to add Additional serving runtime arguments that, at a minimum, point to the chat template for the model. If you are using my modelcar image from above, you can just add the following:

--chat-template=/app/data/template/template_gptoss.jinja

--max-model-len=464

Note: With a 16GB vRAM GPU you won't get much context length; vLLM automatically calculated about 464 tokens for me. Adjust --max-model-len for your hardware.

- Deploy the model: Click Deploy. OpenShift AI will now use your custom runtime to create a pod, load the GPT-OSS model, and expose it as a scalable service. You can monitor the deployment status on the Models and Model Servers page.
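The small context length on a 16GB GPU comes largely from how little memory is left for the KV cache once the weights are loaded. As a rough back-of-the-envelope sketch (not vLLM's actual profiling logic, which also accounts for activations, CUDA graphs, block granularity, and layer types such as sliding-window attention), each token of context costs 2 (a key and a value) × layers × KV heads × head dimension × bytes per element. The model shape and memory budget in the example are made up for illustration; read the real values from your model's config.json and the vLLM startup logs before choosing `--max-model-len`.

```python
def kv_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int, dtype_bytes: int) -> int:
    """Bytes of KV cache one token occupies: one key and one value vector per KV head per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * dtype_bytes


def max_context_tokens(free_kv_bytes: int, per_token_bytes: int) -> int:
    """Rough upper bound on how many tokens fit in the memory left for the KV cache."""
    return free_kv_bytes // per_token_bytes


if __name__ == "__main__":
    # Hypothetical model shape and memory budget, for illustration only.
    per_token = kv_bytes_per_token(num_layers=32, num_kv_heads=8, head_dim=128, dtype_bytes=2)
    free = 64 * 1024 * 1024  # hypothetical: 64 MiB left for the KV cache
    print(per_token, "bytes/token ->", max_context_tokens(free, per_token), "tokens")
```

With these made-up numbers, each token needs 128 KiB, so 64 MiB holds 512 tokens: the same order of magnitude as the limit vLLM picked on a 16GB card, which is why freeing memory (or a larger GPU) is the main lever for a longer context.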

If all goes well, your endpoint should become available, and you can now test GPT-OSS on OpenShift AI!
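One way to test it is to send a request to vLLM's OpenAI-compatible `/v1/chat/completions` route. A minimal sketch using only the Python standard library, under these assumptions: the endpoint comes from a hypothetical `GPT_OSS_ENDPOINT` environment variable (copy the real URL from the model's details in the dashboard), the model name is `gpt-oss` because the runtime passes the deployment name to `--served-model-name`, and the optional bearer token in the hypothetical `GPT_OSS_TOKEN` variable only matters if you enabled token authentication.

```python
import json
import os
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str, token: Optional[str] = None) -> urllib.request.Request:
    """Build a POST to vLLM's OpenAI-compatible /v1/chat/completions route."""
    body = {
        "model": model,  # must equal the served model name (the deployment name here)
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,  # keep small: this deployment only has ~464 tokens of context
    }
    headers = {"Content-Type": "application/json"}
    if token:
        # Only needed if token authentication is enabled on the deployment.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def chat(base_url: str, model: str, prompt: str, token: Optional[str] = None) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(base_url, model, prompt, token)
    with urllib.request.urlopen(request, timeout=120) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    endpoint = os.environ.get("GPT_OSS_ENDPOINT")  # hypothetical variable name
    if endpoint:
        print(chat(endpoint, "gpt-oss", "Say hello in one sentence.", os.environ.get("GPT_OSS_TOKEN")))
    else:
        print("Set GPT_OSS_ENDPOINT to your model's inference endpoint URL.")
```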

Conclusion

By using my pre-built container image and the user-friendly OpenShift AI web console, you can quickly and efficiently deploy the newly released GPT-OSS models!