Edit: 7/4/2020 – Updated and preferred install guide here.

We will be using CentOS 8 as our base operating system to install the oVirt v4.4.0 self-hosted engine today. In fact, CentOS 7 is no longer supported as a base OS from version 4.4.0 onward. So once we have our OS installed, let’s start by making sure we are up to date.

sudo yum update -y && sudo yum install tmux -y
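Since 4.4 will not install on CentOS 7, it may be worth confirming what the host is actually running before going further. The snippet below is a minimal sketch, not part of the official procedure: `os_id_and_major` is a hypothetical helper name, and it assumes the standard `/etc/os-release` file is present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<distro id>:<major version>" from an
# os-release style file (defaults to /etc/os-release).
# The file is sourced in a subshell so its variables do not leak out.
os_id_and_major() {
    (
        . "${1:-/etc/os-release}" || exit 1
        echo "${ID}:${VERSION_ID%%.*}"
    )
}

# Warn (but do not abort) if the host does not look like CentOS 8.
if [ "$(os_id_and_major)" != "centos:8" ]; then
    echo "Warning: expected centos:8, found $(os_id_and_major)" >&2
fi
```

The check only warns, so you can still continue on a compatible rebuild if you know what you are doing.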

To install oVirt 4.4 we need to set up the repository that provides it and all of its dependencies.

sudo yum localinstall -y https://resources.ovirt.org/pub/yum-repo/ovirt-release44.rpm

Now that we have the repo, we can continue by installing the hosted engine setup tool.

sudo yum install -y ovirt-hosted-engine-setup

Before continuing, I recommend picking a static IP to assign to your oVirt manager VM and pointing its hostname to that IP via your DNS server of choice. Do this before running the installation process.
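A quick way to confirm the DNS record is in place is to ask the system resolver for the name. This is only a sketch under assumptions: `name_resolves` is a hypothetical helper, and `engine.example.com` stands in for whatever hostname you picked for the manager VM.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the given name resolves through
# the system resolver (DNS or /etc/hosts).
name_resolves() {
    getent hosts "$1" > /dev/null 2>&1
}

# Usage: ./check-dns.sh engine.example.com   (placeholder hostname)
if [ -n "${1:-}" ]; then
    if name_resolves "$1"; then
        echo "$1 resolves to $(getent hosts "$1" | awk '{print $1; exit}')"
    else
        echo "$1 does not resolve yet; fix DNS before deploying" >&2
    fi
fi
```

Run it on the host you are deploying from, since that is the machine that needs to resolve the name.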

Once you have that all squared away, you are free to begin the deployment process. Starting it inside tmux keeps the installer running if your SSH session drops.

tmux

sudo hosted-engine --deploy

Follow along and answer all of the prompts. The install process will take a while, depending on your hardware specs.

Once the oVirt manager VM is successfully deployed, it will ask you for the storage domain you would like to use. Enter your storage of choice; we are using NFS in this example.

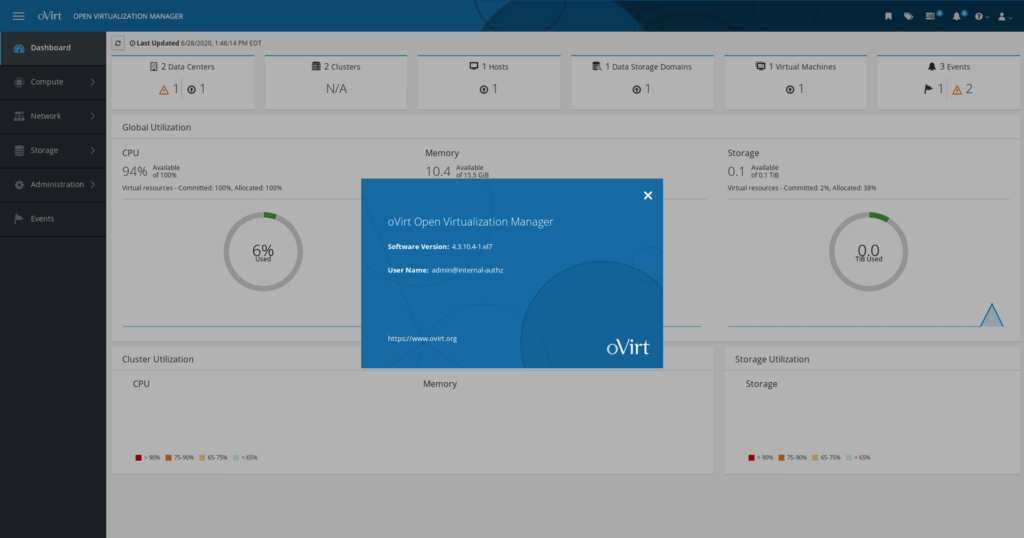

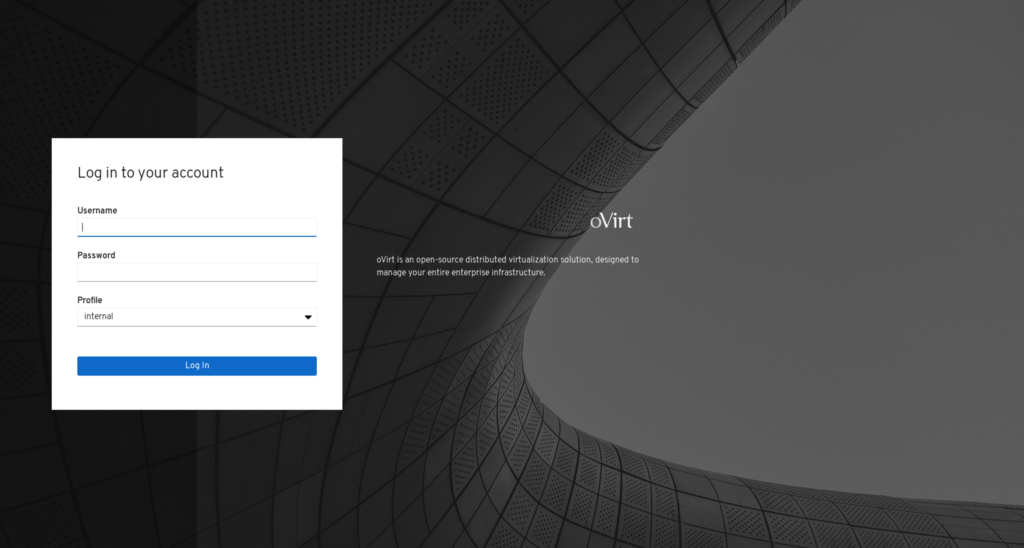
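For NFS, the storage location is normally given as a server name and an absolute export path joined by a colon. If you want to sanity-check the value before typing it in, something like the following could help. It is a rough sketch: `looks_like_nfs_path` is a hypothetical helper, the server and path are placeholders, and it only checks the shape of the string without contacting the server.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough check that a string looks like server:/export/path.
# It does not contact the server and does not handle IPv6 literals.
looks_like_nfs_path() {
    case "$1" in
        *[[:space:]]*) return 1 ;;  # no whitespace allowed
        /*|:*)         return 1 ;;  # must start with a server name
        *:/*)          return 0 ;;  # server, colon, absolute path
        *)             return 1 ;;
    esac
}

# nfs.example.com and /exports/ovirt are placeholders.
if looks_like_nfs_path "nfs.example.com:/exports/ovirt"; then
    echo "format looks fine"
fi
```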

When finished, you may log in with the credentials you set during deployment, at the hostname you configured for the oVirt manager VM.

Happy virtualizing!

-Mike